Clover’s Toy Box development: rendering Star Trek Voyager—Elite Force MDR models, Doom 3 MD5 models, browsing archives, and various issues I ran into.

- Miscellaneous.

- CPU vertex shader support.

- MDR Model Support.

- MDR Frame Interpolation.

- Model Memory Corruption?

- C++ Memory Corruption?

- Clover Resource Utility.

- MD5 Model Support.

- Toy Box renderer on a typical Windows PC.

- re: Can Turtle Arena go any faster?

1. Miscellaneous

I reworked vector length, normalize, distance, etc. to replace 1.0f / sqrt( value ) with an rsqrt( value ) function using the vrsqrtss assembly instruction. There was no noticeable performance difference, though, so I disabled it.

Use a CPU buffer with persistent mapped buffers. Direct access—even when never reading it—is slower than using a separate CPU buffer and memcpy()’ing to the persistent mapped buffer.

I finally committed the changes to cross-compile Qt model viewer for macOS from Linux. (I did it at the same time as for Maverick last year.)

I moved the website from its shared hosting location since 2009 to a VPS. This way I can have a VPS to test Toy Box server without increasing overall cost too much.

I got Hatsune Miku: Project DIVA Future Tone and Mega Mix+.

2. CPU Vertex Shader Support

I did a lot of work to support the ‘framework’ of generating vertexes for effects on the CPU but nothing to really show yet.

Dynamic vertexes can now be set up on the CPU for models and are uploaded all at once before drawing begins. It’s done on a per-mesh basis. If it’s a view dependent shader effect, it will be done separately for each scene it appears in. Otherwise it will be done once and reused in all scenes that specific object appears in. It prefers merging low vertex count surfaces (mainly sprites) to reduce draw calls over reusing them in other scenes.

Vertexes that are passed in to be drawn are now stored in the entity buffer instead of copied to the frame’s vertex buffer directly. This allows for culling (still not implemented) and per-scene CPU vertex effects. I also had to change the surface vertex generation for all built-in primitive types (rectangle, circle, etc), CPU animated model mesh, and debug lines (for model bones, normals, etc) to write to entity buffer.

I’m considering reusing generated vertexes for the same model/material within the scene as well. The main concern is how to handle lighting. It will ideally use GLSL for modern OpenGL but for OpenGL 1 I had planned to use a CPU vertex shader for lighting like Quake 3, which prevents reusing the same geometry with different lighting, at least for OpenGL 1. I may look into OpenGL 1 light support, as in Quake 3 models only use a single averaged light source anyway. I’m also a little concerned with the performance/memory cost of scanning the list of entities to see if the same mesh/material was already uploaded, or of keeping a specific list for this.

I still need to add support for multiple layers (uploading vertexes for each rendering pass). Mirrors/portals turned out to be broken already, so I’m currently ignoring that these changes would have broken them. I may have caused Doom-like multisided sprites to be invisible; I just noticed it anyway.

3. MDR Model Support

(Models are full bright, missing player shadow, and missing Quake 3 shader support.)

I added support for rendering MDR models in Toy Box renderer. MDR models are used for player models in Star Trek Voyager — Elite Force. I added support for Lilium Voyager renderer API to “Toy Box renderer for Spearmint”; it doesn’t support additional Elite Force render entity types, like bézier lines.

MDR is a skeletal model format based on Quake 3’s MD4 format. I also added MD4 support but no models exist due to the incomplete implementation in Quake 3. (Unlike what the Wikipedia id Tech 3 article says, the MD4 format data structures in the Quake 3 source code define a complete working format.) Just about every game based on Quake 3 uses a modified version of MD4. Adding MDR is kind of a base for adding other model formats in the future.

I started working on MDR and a few other MD4 based model formats last year. In the last three months I cleaned up MD4/MDR support and added support for animations. (I don’t load/use the lower level-of-detail meshes though.)

In Toy Box, MDR is effectively converted into IQM format at load but has to use linear matrix lerp for bone joint interpolation instead of quaternion spherical lerp (more on that in the next section).

The performance should be more or less the same as IQM in Toy Box due to using the same rendering code. This also means that MDR uses GPU bone skinning in Toy Box renderer, unlike ioquake3/Spearmint OpenGL2 renderer where only IQM has GPU bone skinning. Under OpenGL 1/CPU vertex shader, MDR also uses CPU bone skinning with optimized deduplicated influence list that I implemented for IQM; similar to what I added for IQM in ioquake3/Spearmint opengl1 renderer. (I haven’t looked at performance comparison though.)

I added support for model meshes with more than 20 bones to use CPU bone skinning to fix a few Elite Force single-player models. (This applies to IQM and other formats as well.) (The 20 bones per-mesh limit for GPU bone skinning is due to the OpenGL ES minimum required shader uniform variable size.)

A few models have vertexes with 5 joint influences and I drop the lowest influence to fit 4 influences for GPU bone skinning. Some (or maybe all?) of the fifth joint influences are 1%, so it seems like a mishap rather than utilizing a feature. IQM doesn’t support more than 4 joint influences for a vertex and I’m not excited to add it. I’d like to store all the weights and use CPU bone skinning instead of dropping the influence but I haven’t yet.
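As a rough illustration of dropping the lowest influence, here is a minimal sketch in C. The `influence_t` type and the `ReduceInfluences()` function are hypothetical names for this example, not the Toy Box code, and rescaling the remaining weights so they sum to 1 is my assumption about what happens after the drop.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_GPU_INFLUENCES 4 /* per-vertex limit for GPU bone skinning */

/* Hypothetical influence record; not the actual Toy Box structure. */
typedef struct {
    int   boneIndex;
    float weight;
} influence_t;

/*
 * Drops the lowest-weight influences until at most MAX_GPU_INFLUENCES
 * remain, then rescales the remaining weights to sum to 1 (assumption).
 * Returns the new influence count.
 */
static int ReduceInfluences( influence_t *influences, int count )
{
    int   i;
    float total = 0.0f;

    assert( influences != NULL && count >= 0 );

    while ( count > MAX_GPU_INFLUENCES ) {
        int lowest = 0;

        for ( i = 1; i < count; i++ ) {
            if ( influences[i].weight < influences[lowest].weight ) {
                lowest = i;
            }
        }

        /* Close the gap, keeping the original order of the rest. */
        for ( i = lowest; i < count - 1; i++ ) {
            influences[i] = influences[i + 1];
        }
        count--;
    }

    for ( i = 0; i < count; i++ ) {
        total += influences[i].weight;
    }
    if ( total > 0.0f ) {
        for ( i = 0; i < count; i++ ) {
            influences[i].weight /= total;
        }
    }

    return count;
}
```

With a 1% fifth influence the rescale barely changes the other four weights, which is why dropping it is hard to notice.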

4. MDR Frame Interpolation

MD4/MDR uses a 3×3 rotation/scale matrix for bone joints. IQM uses rotation quaternion and scale vector so I convert the MD4/MDR bone joint matrix into that form. This should be fine but interpolating bones has issues.

The MD4 format was kind of strange to me but is actually straightforward. The joints are absolute position matrixes and vertexes contain the vertex position in bone local space for each bone that influences the vertex (single vertex, multiple positions). To get the animated vertex position, multiply the influence bone joint matrix by the vertex bone local space position, scale by the influence weight, and add all these influenced positions for the vertex together.
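The multiply, scale, and add steps can be sketched as below. This is a minimal sketch, assuming a 3×4 joint matrix (3×3 rotation/scale plus a translation column); the type and function names are made up for the example rather than taken from Quake 3 or Toy Box.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical types: a 3x4 joint matrix and one per-bone vertex influence. */
typedef struct {
    float matrix[3][4]; /* 3x3 rotation/scale, last column is translation */
} boneMatrix_t;

typedef struct {
    int   boneIndex;
    float boneWeight;
    float offset[3];    /* vertex position in this bone's local space */
} vertexWeight_t;

/* Sums the weighted, bone-transformed positions of a single vertex. */
static void SkinVertexMD4Style( const boneMatrix_t *bones,
                                const vertexWeight_t *weights,
                                int numWeights, float out[3] )
{
    int i, axis;

    assert( bones != NULL && weights != NULL && out != NULL );

    out[0] = out[1] = out[2] = 0.0f;

    for ( i = 0; i < numWeights; i++ ) {
        const vertexWeight_t *w = &weights[i];
        const boneMatrix_t   *bone = &bones[w->boneIndex];

        for ( axis = 0; axis < 3; axis++ ) {
            /* joint matrix times bone local space position */
            float transformed = bone->matrix[axis][0] * w->offset[0]
                              + bone->matrix[axis][1] * w->offset[1]
                              + bone->matrix[axis][2] * w->offset[2]
                              + bone->matrix[axis][3];

            /* scale by the influence weight and accumulate */
            out[axis] += w->boneWeight * transformed;
        }
    }
}
```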

IQM (and most newer formats) have vertexes in a bind pose (a vertex has a single position for all influences) with a relative hierarchy of joints which can be modified; pose matrixes, which are the joint offset from the bind pose, are then calculated from it. (I call it a pose matrix; I’m not sure what the correct name is.)

I was initially working on another MD4 based format, SKB format used by Heavy Metal FAKK2 and American McGee’s Alice, and vertexes are indeed in bone local space. (There is no default bone pose in the SKB file and animations are in separate SKA files so it’s actually not possible to sanely display the mesh-only file by itself.)

MDR models in Elite Force however… have vertexes in a bind pose (duplicating the same position in all of a vertex’s influences’ ‘bone local space’ positions) and the ‘absolute bone joint matrixes’ in the file are actually pose matrixes to offset from the vertex bind pose. Using quaternion spherical lerp interpolation with pose matrixes is a problem. Arms pop off the torso between some frames, among other animation issues. (Converting a pose matrix back to an absolute joint is not possible.)

I expected there could be differences with MDR joint interpolation but I thought quaternion spherical lerp would be better than linear matrix lerp. I replaced IQM linear matrix lerp with quaternion spherical lerp in ioquake3/Spearmint due to linear matrix lerp badly deforming the model (though if I remember correctly this was the absolute joint matrix, not the pose matrix).

I kind of wonder if MDR’s linear matrix lerp on the pose matrix has less skewing than linear matrix lerp on the absolute joint matrix. Though I would guess it’s impractical when dynamic joint rotation is supported as it would have to calculate pose matrixes for two model frames and then lerp between them (instead of just lerping joints a few steps earlier in the process).

I made MD4/MDR in Toy Box use linear matrix lerp like Quake 3 / Elite Force instead of quaternion spherical lerp. This is the only MDR rendering difference compared to IQM in Toy Box. (Unlike Spearmint where every model format is completely separate, resulting in copy-and-pasting a lot of code for every model format I add.)
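For reference, linear matrix lerp is just a per-element blend of the two frames’ joint matrixes. The sketch below assumes a 3×4 matrix layout and a Quake 3 style backlerp value (the fraction of the old frame); `jointMatrix_t` and `LerpJointMatrix()` are illustrative names, not the Toy Box code.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical 3x4 joint matrix: 3x3 rotation/scale plus translation column. */
typedef struct {
    float matrix[3][4];
} jointMatrix_t;

/*
 * Blends every matrix element independently.
 * backlerp is the fraction of the old frame (0 = new frame, 1 = old frame).
 */
static void LerpJointMatrix( const jointMatrix_t *oldJoint,
                             const jointMatrix_t *newJoint,
                             float backlerp, jointMatrix_t *out )
{
    float frontlerp = 1.0f - backlerp;
    int   row, col;

    assert( oldJoint != NULL && newJoint != NULL && out != NULL );

    for ( row = 0; row < 3; row++ ) {
        for ( col = 0; col < 4; col++ ) {
            out->matrix[row][col] = frontlerp * newJoint->matrix[row][col]
                                  + backlerp * oldJoint->matrix[row][col];
        }
    }
}
```

Because the rotation part is blended element by element, the result is generally not a pure rotation. Halfway between identity and a 90 degree rotation about Z, for example, the first row becomes (0.5, -0.5, 0), which has a length of about 0.71, so the model shrinks slightly mid-blend. That is the kind of deformation quaternion spherical lerp avoids.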

(I still store MD4/MDR bone joints as rotation quaternion and scale vector instead of 3×3 matrix which is potentially a problem if skewed/shear matrixes are used. And yes, still missing MDR lower level-of-detail meshes.)

5. Model Memory Corruption?

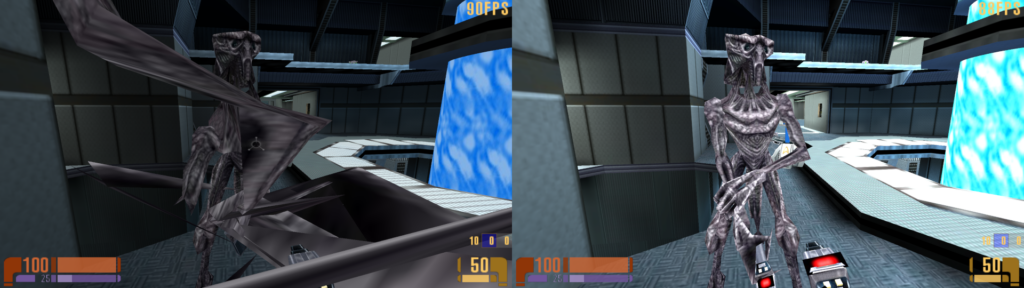

Left: Broken, Right: Not broken.

I ran into an issue that seemed like memory corruption. It wasn’t. The CPU bone skinning code expected per-mesh bone reference indexes but my loaded MDR had real joint indexes and caused out of bounds bone joint matrix access. This resulted in vertexes with NaNs (drawn at the center of the screen) and far away (which is not captured well in a single screenshot).

joints[ meshBoneReference[ vertexInfluence->boneIndex ] ]

The meshBoneReference[] array size is 20 but vertexInfluence->boneIndex incorrectly held joints[] indexes.

IQM models were set up correctly but remapping influence bone joint indexes was unintentionally lost when I copy-and-pasted the code for MDR. The IQM code remapped bone joint indexes in-place but the code for MDR did not so it referenced the original real bone joint indexes.

The per-mesh bone references are only needed for GPU bone skinning (to only upload the referenced bones to the uniform buffer because OpenGL ES has a smaller uniform buffer that may only fit 20 bone joints). I ended up switching CPU bone skinning to use real bone joint indexes which removed unneeded bone reference look up (so I had to change IQM influences).
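The remapping step that got lost for MDR can be sketched roughly like this. It is a simplified sketch, assuming a flat array of influence bone indexes per mesh; `meshBones_t`, `FindOrAddBoneReference()` and `RemapInfluencesToMeshBones()` are hypothetical names for the example.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_MESH_BONES 20 /* GPU bone skinning limit per mesh */

/* Hypothetical per-mesh table: slot -> real joint index. */
typedef struct {
    int numBoneReferences;
    int boneReferences[MAX_MESH_BONES];
} meshBones_t;

/* Returns the slot for jointIndex, adding it if needed; -1 if the table is full. */
static int FindOrAddBoneReference( meshBones_t *mesh, int jointIndex )
{
    int i;

    for ( i = 0; i < mesh->numBoneReferences; i++ ) {
        if ( mesh->boneReferences[i] == jointIndex ) {
            return i;
        }
    }
    if ( mesh->numBoneReferences >= MAX_MESH_BONES ) {
        return -1;
    }
    mesh->boneReferences[mesh->numBoneReferences] = jointIndex;
    return mesh->numBoneReferences++;
}

/*
 * Rewrites real joint indexes into per-mesh reference slots in-place.
 * Returns 1 on success. Returns 0, leaving boneIndexes untouched, when the
 * mesh uses more than MAX_MESH_BONES joints (caller would use CPU skinning).
 */
static int RemapInfluencesToMeshBones( meshBones_t *mesh, int *boneIndexes,
                                       int numInfluences )
{
    int i;

    assert( mesh != NULL && boneIndexes != NULL );

    mesh->numBoneReferences = 0;

    /* Pass 1: build the reference table without modifying the influences. */
    for ( i = 0; i < numInfluences; i++ ) {
        if ( FindOrAddBoneReference( mesh, boneIndexes[i] ) < 0 ) {
            return 0;
        }
    }

    /* Pass 2: every joint is in the table now, so the lookup always succeeds. */
    for ( i = 0; i < numInfluences; i++ ) {
        boneIndexes[i] = FindOrAddBoneReference( mesh, boneIndexes[i] );
    }

    return 1;
}
```

After remapping, `mesh.boneReferences[ boneIndexes[i] ]` gives back the real joint index. Skipping the remap while still indexing through the table reads past the 20 entries as soon as a model has a joint index of 20 or higher.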

I ran into this while testing Toy Box renderer for Lilium Voyager (Star Trek Voyager — Elite Force Holomatch). Species 8472 player model had the issue. I think because it has more bones than other player models.

6. C++ Memory Corruption?

After I fixed the Elite Force bone joint issue I immediately ran into another issue that seemed like memory corruption. It wasn’t. A struct was initialized to 0 and then somehow crashed trying to free a non-NULL pointer in that struct. (I had issues trying to watch the memory in GDB because it was a class member and it went out of scope; but evidently the answer for that was watch -l <local variable>.)

I had re-applied a bunch of unfinished code I carry out of tree and Qt C++ GUI “Clover Resource Utility” crashed in changes related to loading file archives. The next day I figured out that my FS_FileSystem struct was different sizes in C++ and C resulting in C reading/writing past the end of the shorter C++ struct.

I thought it was related to field alignment adding padding between fields (which didn’t really make sense why it would be different between C++ and C). The field order was 32-bit, 64-bit, ... so there was 32-bit padding inserted to make the 64-bit field be 64-bit aligned. I moved a 32-bit field before the 64-bit field and that removed extra padding in C and made the sizes the same in both C++ and C.

However looking at offsets of all fields in the struct, I found that my boolean type was 8-bit in C++ and 32-bit in C. The order of 32-bit, 32-bit, 64-bit, boolean, boolean, 64-bit incidentally had padding in C++ that made the struct the same size with 8-bit and 32-bit boolean but reading/writing the booleans would be wrong. I spent some time searching for why my boolean enum size would be different in C++. I found -fno-short-enums GCC compiler flag but it didn’t fix the issue.

I forgot that my boolean type used C++ bool (8-bit) in C++ and enum (32-bit) in C resulting in different data sizes. I knew this could be an issue but I only thought about it in the context of reading/writing files and networking, not C++ vs C ABI… Making C++ use enum like C fixed the issue.
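The layout difference can be reproduced in plain C by standing in for C++ bool with an 8-bit integer. This is only a sketch: the struct and field names are invented, the field order follows the one described above, and the sizes in the comments assume a typical 64-bit ABI where enums are int sized.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The boolean as the C side sees it: an int sized enum with GCC/Clang defaults. */
typedef enum { enumFalse, enumTrue } enumBoolean_t;

/* Stand-in for C++ bool, which is 8-bit on common compilers. */
typedef uint8_t byteBoolean_t;

/* Field order: 32-bit, 32-bit, 64-bit, boolean, boolean, 64-bit. */
typedef struct {
    uint32_t      a;
    uint32_t      b;
    uint64_t      c;
    enumBoolean_t flagOne;  /* offset 16 */
    enumBoolean_t flagTwo;  /* offset 20 */
    uint64_t      d;
} layoutSeenByC_t;

typedef struct {
    uint32_t      a;
    uint32_t      b;
    uint64_t      c;
    byteBoolean_t flagOne;  /* offset 16 */
    byteBoolean_t flagTwo;  /* offset 17, followed by padding before d */
    uint64_t      d;
} layoutSeenByCpp_t;

/*
 * A guard like this on the shared boolean typedef, compiled by both
 * languages, would flag a size mismatch at build time.
 */
_Static_assert( sizeof( enumBoolean_t ) == sizeof( uint32_t ),
                "boolean must be 32-bit on both sides of the C/C++ boundary" );

/* Returns 1 if both layouts agree on where the second boolean lives. */
static int SecondBooleanOffsetsMatch( void )
{
    return offsetof( layoutSeenByC_t, flagTwo )
        == offsetof( layoutSeenByCpp_t, flagTwo );
}
```

On such an ABI both structs can end up the same total size because of the padding before the last 64-bit field, which is what makes this kind of mismatch easy to miss when only comparing sizeof.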

7. Clover Resource Utility

A year ago I made a prototype for Clover Resource Utility that could view files in archives and extract files. I finally integrated it. The file browser side-panel is resizable and hide-able and now supports directories in addition to archives. Unlike the prototype, the application supports opening regular files as well as archives.

I added support for a file passed on the command line to reference a file in an archive (e.g., “clover-resource-utility pak0.pk3/maps/q3dm1.bsp”), which will load the archive and display the file.
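One simple way to split such a path is to look for a path component that ends in a known archive extension. The sketch below is an assumption about how this could work, not how Resource Utility does it; `SplitArchivePath()` and the extension list are hypothetical, and a real implementation would likely also check that the archive candidate is a file on disk rather than a directory.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * Splits "pak0.pk3/maps/q3dm1.bsp" into "pak0.pk3" and "maps/q3dm1.bsp".
 * Returns 1 on success, 0 if no archive component was found or a buffer
 * is too small.
 */
static int SplitArchivePath( const char *path,
                             char *archive, size_t archiveSize,
                             char *inner, size_t innerSize )
{
    /* Hypothetical list; the trailing '/' requires a file inside the archive. */
    static const char *const extensions[] = { ".pk3/", ".zip/" };
    size_t i;

    assert( path != NULL && archive != NULL && inner != NULL );

    for ( i = 0; i < sizeof( extensions ) / sizeof( extensions[0] ); i++ ) {
        const char *found = strstr( path, extensions[i] );
        const char *innerStart;
        size_t      archiveLength, innerLength;

        if ( found == NULL ) {
            continue;
        }

        innerStart = found + strlen( extensions[i] );
        archiveLength = (size_t)( innerStart - path ) - 1; /* exclude the '/' */
        innerLength = strlen( innerStart );

        if ( innerLength == 0 || archiveLength >= archiveSize
            || innerLength >= innerSize ) {
            return 0;
        }

        memcpy( archive, path, archiveLength );
        archive[archiveLength] = '\0';
        memcpy( inner, innerStart, innerLength + 1 ); /* includes terminator */
        return 1;
    }

    return 0;
}
```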

I’ve done a lot for loading and displaying content but there was one area I ignored: freeing content. Models and their textures were not entirely freed so eventually Resource Utility silently failed to load any new models as the GPU vertex buffers were full. This has been fixed by freeing all model GPU data when a new model is loaded in Resource Utility. Materials/textures are now reference counted and freed if no longer used.

I added support for viewing text files.

8. MD5 Model Support

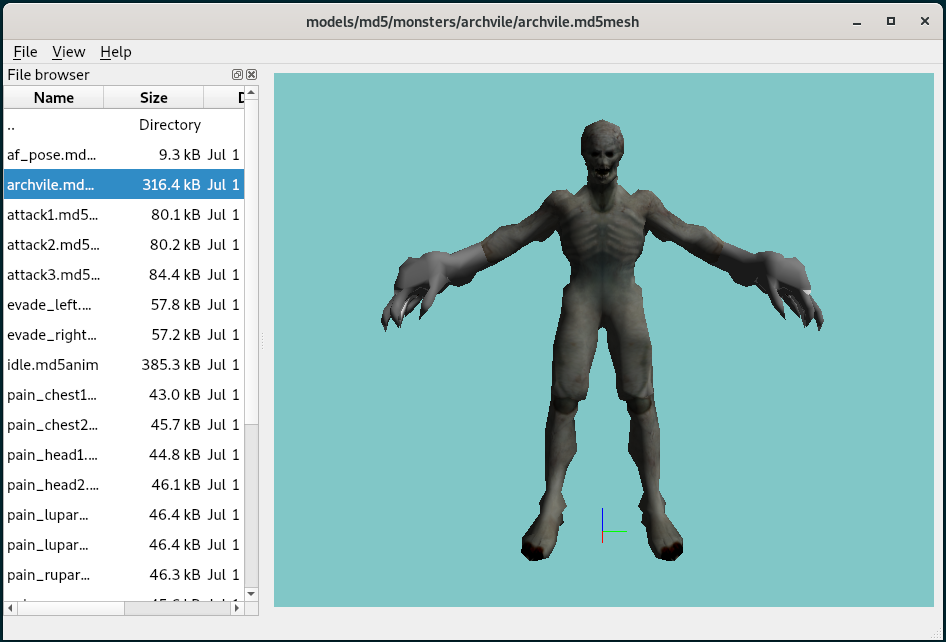

When working on various MD4-based model formats last year, I also added support for loading meshes from Doom 3 MD5 .md5mesh models. I ran into .md5anim files crashing Resource Utility when testing archive support.

I fixed .md5anim crashing the .md5mesh handler (missing MD5 joints/meshes) and added support for loading joints from .md5mesh files so it is possible to move vertexes using the skeleton. This was more difficult for me than loading .md5anim files because I’m dumb and it took a while to convert absolute joints in .md5mesh to be relative to the parent joint.
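The absolute to parent-relative conversion boils down to applying the inverse of the parent’s rotation. Here is a minimal sketch, assuming joints are stored as a position plus a unit quaternion with a parent index (-1 for a root); the types and function names are made up for the example and are not the Toy Box loader.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y, z, w; } quat_t;

/* Hypothetical joint: parent index (-1 for root), position, unit quaternion. */
typedef struct {
    int    parent;
    float  pos[3];
    quat_t rot;
} joint_t;

/* Hamilton product a * b. */
static quat_t QuatMultiply( quat_t a, quat_t b )
{
    quat_t r;
    r.w = a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z;
    r.x = a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y;
    r.y = a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x;
    r.z = a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w;
    return r;
}

/* For a unit quaternion the conjugate is the inverse rotation. */
static quat_t QuatConjugate( quat_t q )
{
    q.x = -q.x;
    q.y = -q.y;
    q.z = -q.z;
    return q;
}

/* Rotates a point by unit quaternion q: q * (in, 0) * conjugate(q). */
static void QuatRotatePoint( quat_t q, const float in[3], float out[3] )
{
    quat_t p = { in[0], in[1], in[2], 0.0f };
    quat_t r = QuatMultiply( QuatMultiply( q, p ), QuatConjugate( q ) );
    out[0] = r.x;
    out[1] = r.y;
    out[2] = r.z;
}

/* Converts absolute (model space) joints into parent-relative joints. */
static void AbsoluteToRelativeJoints( const joint_t *absolute,
                                      joint_t *relative, int numJoints )
{
    int i;

    assert( absolute != NULL && relative != NULL && absolute != relative );

    for ( i = 0; i < numJoints; i++ ) {
        int parent = absolute[i].parent;

        relative[i] = absolute[i]; /* root joints are already relative */

        if ( parent >= 0 ) {
            quat_t inverseParent = QuatConjugate( absolute[parent].rot );
            float  delta[3];

            delta[0] = absolute[i].pos[0] - absolute[parent].pos[0];
            delta[1] = absolute[i].pos[1] - absolute[parent].pos[1];
            delta[2] = absolute[i].pos[2] - absolute[parent].pos[2];

            /* Express the offset and rotation in the parent's local space. */
            QuatRotatePoint( inverseParent, delta, relative[i].pos );
            relative[i].rot = QuatMultiply( inverseParent, absolute[i].rot );
        }
    }
}
```

For example, if the parent is rotated 90 degrees about Z and the child sits 2 units along world Y from it with the same rotation, the child’s relative position comes out as 2 units along the parent’s local X with an identity relative rotation.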

I had to fix a few issues with rendering skeletal model with no animations.

I added loading .md5anim animation files. There isn’t a way to connect it to a .md5mesh model in Resource Utility so it just draws the bones. I use the same function to load .md5mesh and .md5anim even though there isn’t much shared behavior. (This will be relevant when I load files based on content instead of file extension as both start with “MD5Version”.) I also added a weird feature of allowing a combined .md5mesh + .md5anim file so I could test that animation works correctly.

I haven’t done a full review but several MD5 models have vertexes with 5 to 7 joint influences. So like with MDR, I need to add support for more than 4 influences per-vertex.

9. Toy Box renderer on a typical Windows PC

I tested Toy Box renderer for ioquake3 on Windows for the first time, on a typical Windows PC running Windows XP with an NVIDIA GeForce 6800 graphics card (2004, OpenGL 2.1). I fixed some issues to get it to run and disabled slow code so it runs faster but it still leaves a lot to be desired. (It does have a way better frame rate than the 2008 Intel integrated graphics I used in 2019-2020.)

Compiling WinMain() into a DLL failed to link. The WinMain() wrapper in my Windows platform code was used to call main(). It’s not needed for the renderer DLL but instead of having the DLL opt out, I made Toy Box client opt in. I had to rework handling CFLAGS in the Makefile as the Toy Box renderer DLL also used it. I changed from setting flags specific for server, client, and Qt utility (reusing client stuff for renderer DLL) to be flags for CLI executable, GUI executable, GUI DLL, and Qt executable. (GUI here basically means SDL but the unusable Wii port doesn’t use SDL.)

After it compiled, there was a fatal error at start up. Wide character string functions for copy and concatenate wcscpy() and wcscat() were changed to “secure” wcscpy_s() and wcscat_s() due to me compiling with _FORTIFY_SOURCE but the secure functions don’t exist in msvcrt.dll on Windows XP. (I wasn’t specifically aware it was changing function calls.) From what I read linking with mingwex library might add the missing functions (I haven’t tested) but I just implemented simple wcscpy() and wcscat(). (I’m just copying a couple constant strings for Windows UNC prefix “\\?\” or list files suffix “\*”.)
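Replacement copy and concatenate functions for this purpose only need a few lines. The sketch below is illustrative and assumes the caller sizes the destination buffer; `Simple_wcscpy()`, `Simple_wcscat()` and `BuildListPattern()` are hypothetical names, not the actual Toy Box functions.

```c
#include <assert.h>
#include <stddef.h>
#include <wchar.h>

/* Copies src including its terminator; dest must be large enough. */
static wchar_t *Simple_wcscpy( wchar_t *dest, const wchar_t *src )
{
    wchar_t *out = dest;

    while ( ( *out++ = *src++ ) != L'\0' ) {
        /* copy until the terminator has been written */
    }
    return dest;
}

/* Appends src to the end of dest; dest must be large enough. */
static wchar_t *Simple_wcscat( wchar_t *dest, const wchar_t *src )
{
    wchar_t *end = dest;

    while ( *end != L'\0' ) {
        end++;
    }
    Simple_wcscpy( end, src );
    return dest;
}

/*
 * Builds "\\?\<directory>\*" for listing files in a directory.
 * destCount is the capacity of dest in wide characters.
 * Returns 1 on success, 0 if the result would not fit.
 */
static int BuildListPattern( wchar_t *dest, size_t destCount,
                             const wchar_t *directory )
{
    static const wchar_t prefix[] = L"\\\\?\\";
    static const wchar_t suffix[] = L"\\*";
    size_t needed;

    assert( dest != NULL && directory != NULL );

    needed = wcslen( prefix ) + wcslen( directory ) + wcslen( suffix ) + 1;
    if ( needed > destCount ) {
        return 0;
    }

    Simple_wcscpy( dest, prefix );
    Simple_wcscat( dest, directory );
    Simple_wcscat( dest, suffix );
    return 1;
}
```

Doing the length check once up front keeps the copy functions trivial, which is enough when only a couple of constant strings are involved.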

After it ran, I was greeted with the screen flashing black as it tried different OpenGL contexts until it found a working one (the window has to be created and destroyed for each try). After going through that a few times, I was avoiding looking at the screen. I got rid of the flashing by adding SDL_WINDOW_HIDDEN flag to the created windows and calling SDL_ShowWindow() after a supported OpenGL context is found. This also seemed to be faster.

Initially timedemo four ran at 44 FPS while ioquake3’s opengl1 renderer was 260 FPS. Not good. Testing r_overbrightBits 0 (to disable post process and speed up rendering) broke rendering and only went up to 50 FPS. Overbright uses a framebuffer to render to a texture and then draws to default framebuffer with additional brightness applied. The console printed default framebuffer color, depth, stencil bits as all 0. I later realized the issue was that the default framebuffer depth buffer was not cleared. I use framebuffer depth/stencil bits to determine what needs to be cleared, mainly to shut up debug mode/context GL_KHR_debug warnings about clearing stencil when there is no stencil.

I used the following to get the bits (GL_ARB_framebuffer / OpenGL 3.0):

glGetFramebufferAttachmentParameteriv( GL_FRAMEBUFFER, GL_DEPTH, GL_FRAMEBUFFER_ATTACHMENT_DEPTH_SIZE, &depth );

I looked into it a little and found that GL_EXT_framebuffer_object doesn’t have this. The Windows XP computer was turned off; I wasn’t sure whether it had the EXT or ARB framebuffer object extension but the handling for EXT was clearly wrong. I fixed GL_EXT_framebuffer_object to use glGetIntegerv( GL_DEPTH_BITS, &depth ) like already used for OpenGL ES 2.0.

However the Windows XP computer does have the ARB framebuffer object extension. Forcing it to use glGetIntegerv( GL_DEPTH_BITS, &depth ) worked. It’s valid except for OpenGL Core profile so I added a fallback if all color, depth, stencil bits are zero to use glGetIntegerv(). This fixed the rendering issue due to not clearing depth buffer but didn’t really improve performance.

It may be that using glGetFramebufferAttachmentParameteriv() for default framebuffer requires OpenGL 3.0 but I haven’t checked the OpenGL spec for it. I did find people talking about old NVIDIA driver being broken and not supporting getting default framebuffer bits using attachment parameter and in Core profile also not supporting getting bits from glGetIntegerv(). I added a fallback for the fallback that if the bits are still all zero, to go ahead and clear depth/stencil anyway. Not clearing depth/stencil buffers is after all to just shut up debug warnings that aren’t enabled by default.

I tested using only OpenGL 1.x features and it still ran timedemo four at 50 FPS. (So it’s not modern features / shaders messing it up.) I later remembered that my curved surface code is slow as hell and only my beast Ryzen 5800x can handle it. So I disabled curved surfaces and it moved up to 60 FPS. Also disabling overbright bits jumps the timedemo result to 170 FPS.

I tested with some things disabled (world, entities, HUD, …) but it’s still not super obvious what’s slow. Disabling drawing the world changes timedemo from 170 FPS to 260 FPS (comparable to the opengl1 renderer but you know, missing the world). Disabling other things made a much smaller difference. It seems like I need to improve several things, a lot, to match opengl1 performance.

As I was testing I noticed a couple of rendering issues: vertex lit world surfaces are displaying the lightmap (but vid_restart fixes it…) and in q3dm4 a random color is getting applied to non-world models based on player location(?). (Oh joy. Is this memory corruption?)

ioquake3 timedemo four on an old Windows XP computer with an NVIDIA GeForce 6800 (2004, OpenGL 2.1). (Higher frames per second is better.)

- 3.8 milliseconds – 260 fps – opengl1

- 5.8 milliseconds – 170 fps – Toy Box renderer for ioquake3

- 50 milliseconds – 20 fps – opengl2

My Toy Box renderer is missing features so it’s doing less work. I disabled poor performing features (curves, overbright). I’m using modern features that improve performance (I tested only using OpenGL 1.x features and it’s slower) but it’s still no match for Quake 3’s opengl1 renderer on this hardware.

10. re: Can Turtle Arena go any faster?

A day or two after the post Can Turtle Arena go any faster?, I decided to actually look at improving performance.

Turtle Arena connected to server (Toy Box renderer for Spearmint, map team1, 63 IQM bots) has gone from 2.5 milliseconds per-frame (400 frames per-second) to 1.5 milliseconds (666 FPS). For comparison, 63 MD3 bots take 1.4 milliseconds (714 FPS), which was unaffected by these changes.

It turns out when testing performance it helps to compile with optimizations enabled instead of a debug build (~2.5 -> ~2.0 msec).

It also helps to review where it’s slow and do that less. I added skeleton pose caching to Toy Box renderer so it only sets up each bot’s legs and torso models pose once. Before the skeleton was set up by each call to get an attachment point (which is done multiple times) and when the model is added to the scene. (~2.0 -> ~1.5 msec.)
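The caching idea can be sketched as a small table keyed by the values the Quake 3 renderer API passes in. Everything here is an assumption for illustration: the key fields, the round-robin replacement, the cache size of 8, and the names (`cachedPose_t`, `GetCachedPose()`, `SetupPose()`) are not taken from Toy Box, and the "pose" is a placeholder array instead of real joint matrixes.

```c
#include <assert.h>
#include <stddef.h>

#define POSE_CACHE_SIZE 8 /* must exceed skeletal models attached per player */
#define MAX_POSE_JOINTS 4 /* tiny placeholder size for the sketch */

typedef struct {
    int   valid;
    int   model, frame, oldFrame;   /* cache key */
    float backlerp;                 /* cache key */
    float joints[MAX_POSE_JOINTS];  /* placeholder for real joint matrixes */
} cachedPose_t;

static cachedPose_t poseCache[POSE_CACHE_SIZE];
static int          nextCacheSlot;
static int          numPoseSetups;  /* how often the expensive path ran */

/* Stand-in for the expensive skeleton setup. */
static void SetupPose( cachedPose_t *pose )
{
    int i;

    for ( i = 0; i < MAX_POSE_JOINTS; i++ ) {
        pose->joints[i] = (float)( pose->frame + i );
    }
    numPoseSetups++;
}

/* Returns a cached pose if the key matches, otherwise sets one up. */
static const cachedPose_t *GetCachedPose( int model, int frame, int oldFrame,
                                          float backlerp )
{
    cachedPose_t *pose;
    int           i;

    for ( i = 0; i < POSE_CACHE_SIZE; i++ ) {
        pose = &poseCache[i];
        if ( pose->valid && pose->model == model && pose->frame == frame
            && pose->oldFrame == oldFrame && pose->backlerp == backlerp ) {
            return pose;
        }
    }

    /* Miss: overwrite the oldest slot (round-robin). */
    pose = &poseCache[nextCacheSlot];
    nextCacheSlot = ( nextCacheSlot + 1 ) % POSE_CACHE_SIZE;

    pose->valid = 1;
    pose->model = model;
    pose->frame = frame;
    pose->oldFrame = oldFrame;
    pose->backlerp = backlerp;
    SetupPose( pose );
    return pose;
}
```

With a lookup like this, repeated attachment point queries and the final add-to-scene call for the same legs or torso model hit the cache instead of setting up the skeleton again.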

Using IQM models is in the range of 1.3 to 1.7 milliseconds (mainly 1.5) and overlaps with the MD3 models’ range of 1.2 to 1.5 milliseconds (mainly 1.4). Watching over the whole Turtle Arena team1 map, IQM seems to be the same speed or faster than MD3 but IQM is slightly slower when following a bot in the midst of a sea of bots. IQM and MD3 are closer in performance than I expected to get.

Compiling with optimization enabled improved Spearmint renderers as well.

Turtle Arena (map team1) connected to server:

Client/server running on Ryzen 5800x with NVIDIA GTX 750 Ti.

Before: No optimization enabled – 63 IQM players:

2.5 milliseconds – 400 FPS – Toy Box renderer for Spearmint

12 milliseconds – 80 FPS – opengl2

20 milliseconds – 50 FPS – opengl1

Now: Compiler optimizations enabled + Toy Box skeleton pose caching – 63 IQM players:

1.5 milliseconds – 666 FPS – Toy Box renderer for Spearmint

10 milliseconds – 100 FPS – opengl2

12 milliseconds – 80 FPS – opengl1

The skeleton caching is a hacky solution to support skeleton models with the Quake 3 renderer API. (Quake 3 uses model frame numbers instead of passing skeleton poses around.) The number of skeleton poses to cache must be greater than the number of skeletal models attached to the player so legs and torso models don’t get pushed out of the cache. If all models were skeletal it would need to cache like 8 models or something—legs, torso, head, weapon, weapon barrel, CTF flag, Team Arena persistent powerup, etc. and it would need to be increased if mods added more.

The Toy Box renderer API (that isn’t exposed to Spearmint game logic) supports getting the model skeletal pose (for checking attachment points and applying dynamic changes) and then specifying that the model be drawn with that pose, so the skeleton only needs to be set up for the object once per-frame.