Clover’s Toy Box development: A 10th anniversary, Turtle Arena performance, ANGLE, OpenGL 1.1, and Android.

- April 13th.

- Can Turtle Arena go any faster?

- OpenGL on Windows.

- OpenGL 1.1 fixed-function rendering.

- Android.

1. April 13th.

April 13th was the 10th anniversary of the release of Turtle Arena 0.6 (April 13th 2012).

Turtle Arena 0.6 was planned to be the final release under the title Turtle Arena before replacing the turtles with new original characters (including the character Clover) and renaming the game to EBX (Extraordinary Beat X). I was interested in releasing it as a commercial game. I later picked up the OUYA and GameStick Android micro-consoles as platforms to try to market it on.

None of that happened but Turtle Arena 0.6 introduced the Clover red team icon.

In Turtle Arena 0.7 (2017) I changed it back to a red sai icon. The Clover icon can be re-enabled in Turtle Arena 0.7 using the console commands “g_redteam Clover; g_blueteam Shell;”. (Open the console using shift+esc.)

2. Can Turtle Arena go any faster?

I made some minor performance improvements to Clover’s Toy Box renderer which I felt pretty good about. I was interested to see how the performance in Turtle Arena compared to the Clover’s Toy Box 2021 report.

When testing Turtle Arena (running on the Spearmint engine using Toy Box renderer for Spearmint, map team1 with 63 IQM bots) the frame times were inconsistent. It was hitting lows of what I reported (100 FPS) but also went much higher at some points (200 FPS) while just sitting with the camera still and viewing the whole level. I checked the 2021 version of “Toy Box renderer for Spearmint” and it behaves the same. So in essence, none of my recent improvements are really making a difference in this situation and I was conservative in reporting the performance.

Looking at the CPU usage (using the Linux perf profiler), most of the time is spent in the server’s functions SV_AreaEntities() and SV_AddEntitiesVisibleFromPoint(). Pausing the 63 bot players (using the bot_pause 1 cvar) improves the frame time a lot and solves the very inconsistent frame times. (The frame time spiked every time the server ran the game logic at 20 Hz.) I think SV_AreaEntities() is called by melee attacking. Part of the problem (unconfirmed) may be that the level is one giant room, so there is only one “area” and it has to check all objects to see if they’re in the attack box.

It seems like I’m kind of at the point where in order to improve the frame rate, I would need to optimize the server and game logic rather than the renderer. Though to take an easier route for testing, I ran a Turtle Arena dedicated server with 63 bots and then connected to it. That way the client can just render it without running the server-side game logic. (Run on GNU/Linux with AMD Ryzen 5800x and NVIDIA GTX 750 Ti.) Higher frames per-second (FPS) is better.

Turtle Arena (map team1)

Connected to server – 63 MD3 players:

1.4 milliseconds – 714 FPS – Toy Box renderer for Spearmint

9 milliseconds – 111 FPS – opengl2

17 milliseconds – 59 FPS – opengl1

Connected to server – 63 IQM players:

2.5 milliseconds – 400 FPS – Toy Box renderer for Spearmint

12 milliseconds – 80 FPS – opengl2

20 milliseconds – 50 FPS – opengl1

The 2021 report – when running the server and game logic in the same process:

Single player – 63 MD3 players:

8 milliseconds – 125 FPS – Toy Box renderer for Spearmint

25 milliseconds – 40 FPS – opengl2

50 milliseconds – 20 FPS – opengl1

Single player – 63 IQM players:

10 milliseconds – 100 FPS – Toy Box renderer for Spearmint

33 milliseconds – 30 FPS – opengl2

66 milliseconds – 15 FPS – opengl1

MD3 format has pre-calculated vertex positions for all frames and interpolates vertexes (in a straight line) between model frames. IQM is a skeletal animated format which requires more computation (setting up bone transforms and matrix multiply of bone influences on each vertex) but it has better visual result (vertexes truly rotate when interpolated instead of a straight line between model frames), less memory usage, and allows for dynamic joint rotation.
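To make the “straight line” point concrete, here is a minimal sketch of MD3-style vertex interpolation. It is not code from Toy Box or Spearmint; the Vec3 type and both function names are made up for this example.

```c
/* A 3D position; the struct name is chosen for this sketch only. */
typedef struct { float x, y, z; } Vec3;

/* MD3-style interpolation: blend the same vertex from two stored frames.
   frac is 0.0 at frame a and 1.0 at frame b. */
Vec3 lerp_vertex(Vec3 a, Vec3 b, float frac)
{
    Vec3 out;
    out.x = a.x + (b.x - a.x) * frac;
    out.y = a.y + (b.y - a.y) * frac;
    out.z = a.z + (b.z - a.z) * frac;
    return out;
}

/* Squared distance from the origin, used to show how the vertex cuts the corner. */
float length_squared(Vec3 v)
{
    return v.x * v.x + v.y * v.y + v.z * v.z;
}
```

Take a vertex at (1, 0, 0) in one frame and (0, 1, 0) in the next, a quarter turn around the origin. Halfway between the frames the blend gives (0.5, 0.5, 0), whose squared length is 0.5 instead of 1, so the vertex moves closer to the pivot mid-animation. A skeletal format rotates the bone instead, which keeps the vertex at the same distance from the joint.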

Based on this and other testing it appears that the average frame time for the server logic is 3.5 to 7 milliseconds (explaining the inconsistent frame rate), average for the client logic is ~1 millisecond, and average for Toy Box renderer is ~0.4 milliseconds (MD3) or ~1.5 milliseconds (IQM).

Single player team1 map with 63 MD3 bots is 8 milliseconds per-frame (125 FPS). The renderer time is 0.4 milliseconds so I assume with no rendering it would only improve to 7.6 milliseconds per-frame (131 FPS). Only a 6 FPS increase if no rendering? That’s pretty fast rendering. Improving IQM would be nice though.
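The arithmetic behind that estimate is just a conversion between frame time and frame rate. A small sketch, with function names invented for this example:

```c
/* Convert a frame time in milliseconds to frames per second. */
double fps_from_ms(double frame_ms)
{
    return 1000.0 / frame_ms;
}

/* Estimate the frame rate if one stage's cost were removed from the frame. */
double fps_without_stage(double frame_ms, double stage_ms)
{
    return 1000.0 / (frame_ms - stage_ms);
}
```

fps_from_ms(8.0) gives 125, and fps_without_stage(8.0, 0.4) gives roughly 131.6, which is where the “only about 6 FPS” figure comes from. This assumes the stages run one after another on the CPU and do not overlap.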

The dedicated server is running on the same computer. I suppose this would be a reason to run the server-side logic simultaneously in a separate thread from the client. I haven’t worked with multi-threading but for Turtle Arena (Spearmint) this is probably difficult due to a lot of shared infrastructure between the client and server. Toy Box client/server is functional but does nearly nothing and I tried to make more things self-contained (like the virtual filesystem) so it might be easier to try to make it run the server in a separate thread.

The strange thing is that the time delta isn’t consistent across the renderers. When running the server and game logic in the same process, Toy Box renderer takes ~7 milliseconds longer, opengl2 takes ~17 milliseconds longer, and opengl1 takes ~35 milliseconds longer.

The results for the opengl1 and opengl2 renderers are based on the Quake 3’s FPS displayed on the HUD which is rounded to whole milliseconds, averaged over a short period, and not very accurate. (For Toy Box renderer’s “714 FPS” the Quake 3 HUD was flashing between 666, 800, and 1000 FPS.) Toy Box renderer measures time in microseconds and averages over a 2 second period (with a graph so I can see inconsistent frame times).
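Averaging microsecond frame times over a fixed window can be sketched as below. This is an illustration of the idea only, not Toy Box’s implementation; the FrameAverager type and function names are hypothetical, and the caller is assumed to measure each frame’s duration with whatever microsecond clock the platform offers.

```c
#include <stdint.h>

/* Accumulates frame durations and publishes an average once per window. */
typedef struct {
    uint64_t window_us;   /* length of the averaging window, e.g. 2000000 */
    uint64_t elapsed_us;  /* time accumulated in the current window */
    uint32_t frames;      /* frames counted in the current window */
    double   average_ms;  /* average frame time of the last finished window */
} FrameAverager;

void frame_averager_init(FrameAverager *fa, uint64_t window_us)
{
    fa->window_us = window_us;
    fa->elapsed_us = 0;
    fa->frames = 0;
    fa->average_ms = 0.0;
}

/* Adds one frame. Returns 1 when a new average was published, else 0. */
int frame_averager_add(FrameAverager *fa, uint64_t frame_us)
{
    fa->elapsed_us += frame_us;
    fa->frames++;
    if (fa->elapsed_us < fa->window_us)
        return 0;

    fa->average_ms = (double)fa->elapsed_us / (double)fa->frames / 1000.0;
    fa->elapsed_us = 0;
    fa->frames = 0;
    return 1;
}
```

Because the sum is kept in whole microseconds and only divided once per window, a 1.4 millisecond frame is not rounded to 1 or 2 milliseconds the way a whole-millisecond counter would.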

In the future, I should measure all renderers with microsecond precision to get more accurate frames per-second / frame time. It’s kind of a pain to integrate displaying the information.

tl;dr (too long; didn’t read)

- Turtle Arena is slow with 63 bots unrelated to rendering.

- Clover’s Toy Box renderer is fast.

- I could use better performance measuring for other renderers.

- I wrote a long post that performance hasn’t visibly changed since the last post.

3. OpenGL on Windows.

The OpenGL API (Open Graphics Library application programming interface) allows software to use the graphics card or integrated graphics to improve rendering performance. OpenGL is an open standard that runs on multiple platforms. Microsoft has their own Direct3D library which is the native graphics API on Windows.

The OpenGL 1.1 API (1997) hasn’t been relevant since like the year 2001. Applications frequently require OpenGL 3.0 (2008) which has a more modern feature set. Even GZDoom, an enhanced version of a 1993 MS-DOS video game, requires OpenGL 3.0. Graphics cards that only offer OpenGL 1.1 are unlikely to be used in the present day.

However OpenGL support on Windows is kind of terrible. The OpenGL driver Microsoft provides only implements OpenGL 1.1. (To their credit for backward compatibility, it still exists.) The graphics hardware developers (NVIDIA, AMD, Intel) can make an OpenGL driver available that supports newer versions (and better accelerates OpenGL 1.1).

However for Windows in a virtual machine or for many computers with Intel integrated graphics running Windows 10, only OpenGL 1.1 is available. There are some OpenGL software renderers, such as LLVMpipe, and Microsoft’s OpenCL and OpenGL compatibility pack for Windows 10 (implemented using Direct3D 12), but I failed to get either to work in a Windows 10 virtual machine. There may be unofficial workarounds for a physical Windows 10 computer with Intel graphics as there were drivers for older Windows versions.

I’ve been unable to run my software in some situations because it lacks support for OpenGL 1.1: Spearmint 1.0.3 requires OpenGL 1.2, and Maverick Model 3D 1.3.13 crashes on OpenGL 1.1 because of Qt 5’s QOpenGLWidget.

Adding OpenGL 1.1 support to Toy Box renderer would not be the best way to solve Windows’ poor OpenGL support. Libraries such as ANGLE can convert OpenGL ES 2.0 (OpenGL for Embedded Systems 2.0) (2007) to Windows’ well supported native graphics APIs Direct3D 9 (2002) and Direct3D 11 (2008). Google Chrome and Mozilla Firefox use ANGLE for WebGL (OpenGL in a Web-browser) on Windows.

OpenGL ES 2.0 has more modern features than OpenGL 1.1 and is already supported by Toy Box renderer. I was able to add a check if it’s OpenGL 1.x to prefer OpenGL ES 2.0 (ANGLE) instead.

When using SDL 2.0.x for window/OpenGL context creation, you just add the ANGLE libEGL.dll and libGLESv2.dll to the application directory. (“Just”, but there are no official downloads; build from source or do like I did and find a random build someone made using GitHub Actions.) Create a dummy window with the SDL_WINDOW_HIDDEN flag, check the OpenGL version, and destroy the window. Then if it was OpenGL 1.x, tell SDL to use OpenGL ES 2, create your game window, and get OpenGL functions using SDL_GL_GetProcAddress(). Done. (Implementing an OpenGL ES 2.0 compatible renderer is an exercise for the reader.)
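The probe-then-switch steps could look roughly like the sketch below. It is not the code Toy Box uses. The function names and the HAVE_SDL2 build switch are invented for this example, and setting SDL_HINT_OPENGL_ES_DRIVER is an assumption about how to make SDL load the ANGLE DLLs; the post only says to tell SDL to use OpenGL ES 2. The version check is split out so it can be compiled and tried without SDL.

```c
#include <stdio.h>
#include <string.h>

/* Returns 1 when a GL_VERSION string reports desktop OpenGL 1.x.
   Desktop OpenGL version strings begin with "major.minor" while OpenGL ES
   strings begin with "OpenGL ES". Unknown or missing input returns 0. */
int should_prefer_gles2(const char *gl_version)
{
    int major = 0, minor = 0;

    if (gl_version == NULL || strncmp(gl_version, "OpenGL ES", 9) == 0)
        return 0;
    if (sscanf(gl_version, "%d.%d", &major, &minor) != 2)
        return 0;
    return major == 1;
}

#ifdef HAVE_SDL2 /* hypothetical build switch so the parser above compiles alone */
#include <SDL.h>
#include <SDL_opengl.h>

/* Probe the default driver with a hidden window, then configure SDL for
   OpenGL ES 2.0 if only OpenGL 1.x was found. Call after SDL_Init() and
   before creating the real game window. */
int choose_gl_api(void)
{
    typedef const GLubyte *(APIENTRY *GetStringFn)(GLenum name);
    int use_es2 = 0;
    SDL_Window *probe = SDL_CreateWindow("probe", 0, 0, 64, 64,
                                         SDL_WINDOW_OPENGL | SDL_WINDOW_HIDDEN);
    if (probe != NULL) {
        SDL_GLContext ctx = SDL_GL_CreateContext(probe);
        if (ctx != NULL) {
            GetStringFn get_string =
                (GetStringFn)SDL_GL_GetProcAddress("glGetString");
            if (get_string != NULL)
                use_es2 = should_prefer_gles2(
                    (const char *)get_string(GL_VERSION));
            SDL_GL_DeleteContext(ctx);
        }
        SDL_DestroyWindow(probe);
    }
    if (use_es2) {
        /* Assumption: this hint asks SDL to go through libEGL.dll (ANGLE). */
        SDL_SetHint(SDL_HINT_OPENGL_ES_DRIVER, "1");
        SDL_GL_SetAttribute(SDL_GL_CONTEXT_PROFILE_MASK,
                            SDL_GL_CONTEXT_PROFILE_ES);
        SDL_GL_SetAttribute(SDL_GL_CONTEXT_MAJOR_VERSION, 2);
        SDL_GL_SetAttribute(SDL_GL_CONTEXT_MINOR_VERSION, 0);
    }
    return use_es2;
}
#endif
```

A string such as “1.1.0” selects the OpenGL ES path, while “4.6.0 NVIDIA …” or an “OpenGL ES 3.0 …” string leaves the defaults alone.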

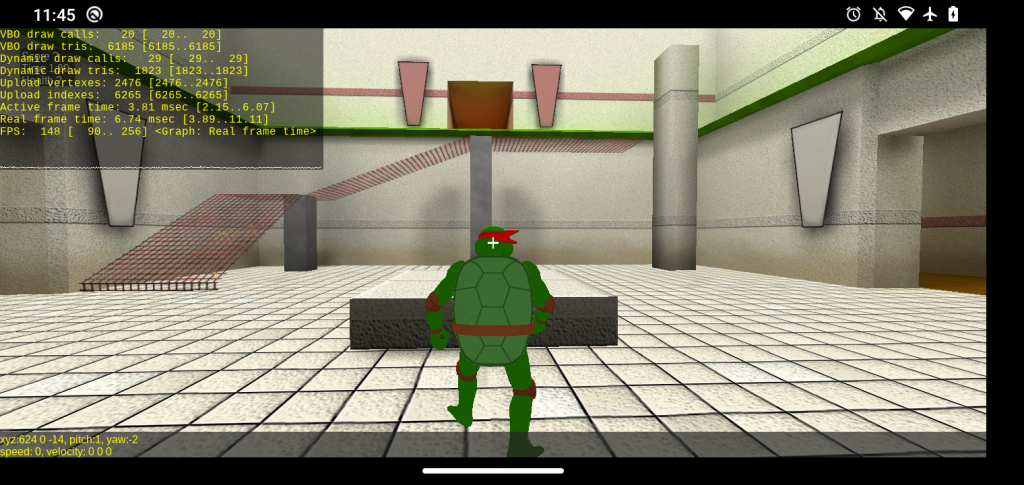

Now Toy Box “just works” in a Windows 10 virtual machine and even exposes OpenGL ES 3.0 and supports almost all the OpenGL extensions that I optionally use (except GL_EXT_buffer_storage, one of the five extensions needed for persistent mapped buffers). The virtual machine ran Toy Box with Turtle Arena’s Subway map and turtle player model at 40 frames per-second at 720p. It’s not great (compared to 1500 FPS on GNU/Linux natively) but a whole lot better than failing to run at all.

I kind of wonder if I should have OpenGL 2.x on Windows default to ANGLE as well. It likely offers more features. In some ways, it’s kind of easy to understand why most “PC games” (e.g., Windows games) target Direct3D, Windows’ native graphics API, as opposed to OpenGL. It will be there and work.

4. OpenGL 1.1 fixed-function rendering.

OpenGL 1.1 used fixed-function rendering where there are a bunch of options to change to control rendering (such as the color or being affected by a dynamic light with a set origin and color). Modern OpenGL uses OpenGL Shading Language (GLSL) shaders that give free-form control to write instructions in code, which opens up many more possibilities for effects.

When I started developing Toy Box I was targeting OpenGL ES 2.0 with GLSL shaders and I put most additional features behind a check for the OpenGL version and/or extension that added the functionality. I kept telling myself not to add OpenGL 1.x (fixed-function) support as it’s a waste of time. Naturally before I tried to get it to run on ANGLE, I went ahead and got it running on OpenGL 1.1. It ran at 7 frames per-second in a Windows 10 virtual machine. That’s worse performance and fewer available features than using ANGLE.

The four missing pieces for running on OpenGL 1.1 were fixed-function rendering (instead of GLSL shaders introduced in OpenGL 2.0), client-side vertex arrays (instead of vertex buffer objects, OpenGL 1.5), mipmap generation (OpenGL 1.4), and rendering multiple layers for a material (instead of multi-texture, OpenGL 1.3).

Getting it working wasn’t too difficult, albeit with terrible mipmap generation (scaled down textures to use further away), and I haven’t added an additional draw pass for Quake 3 BSP lightmaps (as an alternative to multi-texture) because code refactoring is a pain. BSP currently just uses vertex light instead of lightmaps.

The main problem was how to implement a custom “fixed-function vertex shader” (akin to a GLSL vertex shader) to handle dynamic vertex effects like model animation, lighting, texture scrolling/rotation, etc.

I had fixed-function rendering check whether the geometry/material needs vertex shader effects when it’s about to be drawn. If so, I set it up in a temporary vertex array and drew it as a client-side vertex array. (This follows the general idea of Quake 3’s OpenGL 1.1 renderer.) I had fixed-function rendering just disable all vertex buffer object support. (The source data before applying the fixed-function vertex shader was stored in the vertex buffer and would be needlessly uploaded.) This makes it unusable in some modern situations but the point is supporting legacy fixed-function rendering.
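As a rough illustration of one such vertex effect, the sketch below copies source vertexes into a temporary array while applying a texture scroll on the CPU. The Vertex layout, the function name, and the parameters are assumptions for this example and are not taken from Toy Box or Quake 3.

```c
/* Vertex layout invented for this sketch. */
typedef struct {
    float pos[3];           /* position */
    float st[2];            /* texture coordinates */
    unsigned char rgba[4];  /* vertex color */
} Vertex;

/* "CPU vertex shader" for a texture scroll: copies src to dst and offsets
   the texture coordinates by speed * time. src is left untouched so the
   same source data can be reused every frame. */
void cpu_scroll_texcoords(const Vertex *src, Vertex *dst, int count,
                          float speed_s, float speed_t, float time_sec)
{
    float offset_s = speed_s * time_sec;
    float offset_t = speed_t * time_sec;
    int i;

    /* Drop the whole-number part; with a repeating texture only the
       fraction matters, and small offsets keep float precision. */
    offset_s -= (float)(long)offset_s;
    offset_t -= (float)(long)offset_t;

    for (i = 0; i < count; i++) {
        dst[i] = src[i];
        dst[i].st[0] += offset_s;
        dst[i].st[1] += offset_t;
    }
}
```

The dst array is what would be handed to the client-side vertex array draw call. Nothing here depends on OpenGL 1.1, so the same output could just as well be uploaded to a buffer object.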

It seemed like I was adding a large maintenance burden; the OpenGL 1.1 API support isn’t much but the whole “fixed-function vertex shader” that needs to be kept in sync with the GLSL shaders is. Though part of my motivation for adding fixed-function rendering is that I want to run my 3D software on the Nintendo Wii and its “GX” API is similar to OpenGL 1.1.

While I was working on Toy Box’s OpenGL 1.1 fixed-function rendering, it occurred to me that Quake 3’s “fixed-function vertex shader” for dynamic vertex effects is actually just a “CPU vertex shader” and could be streamed to the GPU like any other dynamic vertexes (2D menu, HUD, sprites, etc). It should have been obvious but there is sort of an ideology in OpenGL tutorials/discussion that passing as little data to the GPU as possible and putting everything in GLSL shaders is required for modern rendering and will automatically give you great performance.

This is kind of the first time I feel like I have an interesting solution for improving the Spearmint renderer situation. Implement fixed-function features (alpha test, clip plane, etc) using a basic GLSL uber shader with permutations so only the needed features are enabled. Use Quake 3’s CPU vertex shader to set the vertex positions, texture coords, and colors. GLSL shader support could be added for common cases to improve performance (light-mapped geometry, model lighting, etc) but other features could work the same as they do now in the opengl1 renderer (fewer regressions and no slower than it already is).
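One common way to build uber shader permutations is to prepend a block of #define lines to a shared GLSL source, one per enabled feature. The sketch below shows that idea under my own assumptions; the feature flags, macro names, and function are hypothetical and not from Spearmint or Toy Box.

```c
#include <stdio.h>
#include <stddef.h>

/* Feature bits for one shader permutation (names invented for this sketch). */
enum {
    FEATURE_ALPHA_TEST = 1 << 0,
    FEATURE_CLIP_PLANE = 1 << 1,
    FEATURE_LIGHTMAP   = 1 << 2
};

/* Writes the #define lines for the requested features into out.
   Returns the length written, or -1 if the buffer was too small. */
int build_shader_defines(unsigned features, char *out, size_t out_size)
{
    int len = snprintf(out, out_size, "%s%s%s",
        (features & FEATURE_ALPHA_TEST) ? "#define USE_ALPHA_TEST\n" : "",
        (features & FEATURE_CLIP_PLANE) ? "#define USE_CLIP_PLANE\n" : "",
        (features & FEATURE_LIGHTMAP)   ? "#define USE_LIGHTMAP\n"   : "");

    return (len < 0 || (size_t)len >= out_size) ? -1 : len;
}
```

The resulting string would go after the #version line and before the shared shader body, which wraps each optional feature in a matching #ifdef. Each distinct feature mask is compiled once and cached, so a draw call only pays for the features its material uses.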

Combined with persistent mapped buffers—falling back to vertex buffer objects—it could be used in all modern situations (OpenGL 3.2 Core Profile (macOS), OpenGL ES 2, WebGL) and open the door to adding features that require GLSL shaders such as GPU skeletal animation. I wouldn’t say I have a full vision of how Spearmint opengl1 itself could be modernized yet.

For instance, I buffer all vertex data for the frame in Toy Box before issuing any draw calls for best performance. However I need to figure out how to only generate vertex data when the render entity (model, sprite, etc) is visible in a 3D scene (or mirror/portal) and in some cases generate separate vertexes for multiple scenes (like sprites). Though this mainly seems like changing the architecture of when vertexes are generated and storing references for drawing later.

Now I’m moving Toy Box’s “CPU vertex shader” support from only being usable by OpenGL 1.x (low-level, non-VBO compatible) to being usable by modern OpenGL. My intention is to implement features in the “CPU vertex shader” before GLSL vertex shaders so I can focus on the feature and then later (if needed) on how to properly implement/optimize it for GLSL and pass the data into GLSL shaders. In particular I plan to add support for Quake 3 materials (.shader files) which expose a lot of flexibility that is rarely used, if at all. Accuracy for weird edge cases is more important than speed.

And that’s the story of how “OpenGL 1.1 fixed-function rendering”, a perceived pointless maintenance burden that no one will use, became a core feature that no one will use. The CPU vertex shader will be used but OpenGL 1.1 is unlikely to be used. In particular due to preferring ANGLE over OpenGL 1.1 on Windows.

As a side note, I also added OpenGL ES 1.1 support as it’s very similar to OpenGL 1.x.

5. Android.

Previously

Back in 2012 I wanted to rename Turtle Arena and replace the characters and release it as a commercial game. The PC gaming space seemed crowded and game controller support (for local multiplayer) did not seem easily accessible / well supported. I wanted to release it for the announced Android micro-consoles, such as the OUYA and GameStick, which utilize game controllers with support for local multiplayer. I also got a Motorola Droid phone (Android 2.3.3) because there was an ioquake3 port to it. The new character models and expanded content for a commercial release never happened.

I tried to port Spearmint (the engine Turtle Arena runs on) to Android in 2013 using SDL 2. I got Spearmint to build for Android but running it—from what I remember—just had a black screen on Motorola Droid and immediately exited on the OUYA. I never solved it. I couldn’t get text output from the game or render anything. The renderer port from OpenGL 1 to OpenGL ES 1 was also untested. The SDL OpenGL ES test program worked so it was something wrong with my Spearmint port.

I have OpenGL ES 1.1 and 2.0+ working in Clover’s Toy Box but hadn’t revisited Android.

Present day

I haven’t felt like working on the CPU vertex shader support for a while (which needs to be done as a new core feature for “OpenGL 1.1 fixed-function renderer”…). One day I decided, hey, why don’t I try to port Clover’s Toy Box to Android?

I used the SDL 2 Android README file as a guide. I installed Android Studio (an IDE for developing Android apps). In 2013, I only had command-line tools. In Android Studio I used the SDK manager and installed the latest Android SDK (software development kit, for Java), NDK (native development kit, for C compatibility), and emulator. I used SDL’s build-scripts/androidbuild.sh script to set up SDL’s OpenGL ES test for building on Android.

./androidbuild.sh org.libsdl.testgles ../test/testgles.c

I opened the SDL project in Android Studio (“SDL/build/org.libsdl.testgles/” directory). It went through some project processing and then sat at “configuring” with no additional information for several minutes. I gave up waiting. This was off to a great start. I tried using the command-line (“cd SDL/build/org.libsdl.testgles/ && ./gradlew installDebug”) to see if it would give any more information. It did not. I tried again in Android Studio. It was always stuck at “configuring”.

At some point as I was messing with it I saw a message like “NDK at ./ndk/21.xx did not have a source.properties file”. Installing the NDK had completed without error but I decided to look at the NDK files. It turns out I installed the latest NDK (24) but the gradle build system version listed in the SDL android project defaulted to using NDK 21. The “configuring” step was silently downloading NDK 21 in the background.

I deleted the NDK 21 directory and used the Android Studio’s SDK manager to install NDK 21. The download speed was terrible so I disabled and re-enabled Wi-Fi. The download speed increased. After it finished, it was able to “configure” and I was able to build the SDL OpenGL ES test app.

For whatever reason instead of trying to run it in the Android emulator, I tried to run it on my moto e (2019) phone. After some slightly confusing documentation and difficulty finding options in Android Settings, I managed to enable USB debugging which is required for installing/launching the application from Android Studio.

I was able to launch and run the SDL OpenGL ES test app on my moto e (2019) phone. In my brief time messing with it I found that it starts out running as fast as possible and if you switch to another app and back to SDL OpenGL ES test app it runs slower (and later confirmed it’s 60 FPS v-sync).

Later that day

Equipped with my new knowledge for building and running an SDL application on Android with wonky v-sync, I set out to build Clover’s Toy Box for Android.

Android NDK uses CMake or custom (GNU Make) Makefiles for the application’s build system. (I prefer to use GNU Make.) The NDK Makefile system has you define “modules” that are a static or dynamic library. The SDL library lists its source files and headers path, that it needs to link to Android NDK’s cpufeatures library, and that’s it. It’s really quite simple.

The application to run (SDL OpenGL ES test) is done the same way. A module defining a “libmain.so”. The SDL Java glue loads the libmain.so to call the main function (later I found that it actually calls SDL_main). I needed to build Toy Box into a “libmain.so” library.

In the Toy Box Makefile, I list all the source files (as opposed to the generated object files like ioquake3/Spearmint) and set up libraries similar to NDK’s modules (with dependencies, CFLAGS, exported CFLAGS for the executable/library using it, etc). It seemed like a fairly good match for setting it up as NDK modules.

I started out trying to build it all as one module (missing library private CFLAGS and per-file CFLAGS for enabling particular SIMD instructions…). I ran into issues with missing fseeko() and ftello(). On some architectures, such as ARM and x86, file access for getting/setting the read/write position is a signed 32-bit value which limits to 2GB files. There is a compile flag _LARGEFILE_SOURCE that enables using 64-bit offsets.

However support for 64-bit offsets was not introduced until Android 7.0 (API level 24) and I am targeting Android 4.1 (API level 16), the oldest that recent Android NDK and SDL support. Instead of ignoring _LARGEFILE_SOURCE and using 32-bit offsets for fseeko() and ftello(), the NDK headers disable the functions, resulting in compiler errors. So okay, I disabled _LARGEFILE_SOURCE in my compile flags.

minizip 1.1 was still failing to build. It turns out minizip source code was also adding _LARGEFILE_SOURCE. Disabling it was then asking for fseek64() / ftell64() which are also not present. I found that I could add USE_FILE32API to the compile options to use fseek() instead of fseek64(). I changed minizip’s ioapi.h so that USE_FILE32API would disable adding _LARGEFILE_SOURCE.

libpng leaves implementing a function to check if ARM NEON (SIMD) extension is supported as an exercise for the user. Mine failed to compile because getauxval() function isn’t supported by Android 4.1 (API level 16). In the past I used SDL’s SDL_HasNEON() but I stopped so I could use the same libpng build for SDL (the game) and Qt (model viewer) applications. I looked at how SDL handled it… which is complicated and also uses the NDK cpufeatures library (Apache-2.0 license, that isn’t mentioned in the SDL Android README). I went ahead and enabled using SDL_HasNEON() on Android as I don’t plan to build CLI or Qt applications for Android.

I ran into strange Freetype build errors so I added it as a separate NDK ‘static library’ module that used the correct Freetype private CFLAGS. Alright, the build continues. opusfile fails to build. Missing fseeko() / ftello() again. Like, okay I’m over this so I just added -Dfseeko=fseek -Dftello=ftell to the compile flags so it calls the correct functions. I ran into more compile issues and just started disabling libraries. Eventually I ran into missing linking to SDL and after adding that, it built libmain.so successfully.
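Defining fseeko as fseek works as long as no file is larger than what a long can address. For code you control, an alternative is a tiny wrapper that uses the plain functions and rejects offsets that do not fit. This is a sketch of that alternative, not what Toy Box does; file_seek and file_tell are hypothetical names.

```c
#include <stdio.h>
#include <stdint.h>
#include <limits.h>

/* Seek with plain fseek(), refusing offsets that do not fit in a long.
   On targets where long is 32-bit this limits files to 2GB but fails
   loudly instead of silently wrapping around. */
int file_seek(FILE *fp, int64_t offset, int whence)
{
    if (offset > (int64_t)LONG_MAX || offset < (int64_t)LONG_MIN)
        return -1;
    return fseek(fp, (long)offset, whence);
}

/* Current position as a 64-bit value, or -1 on error (same as ftell). */
int64_t file_tell(FILE *fp)
{
    return (int64_t)ftell(fp);
}
```

This does not help third-party libraries such as opusfile that call fseeko() directly, which is where the compile flag approach is the practical fix.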

Did I try to test it? Nah. Instead I decided to rip out using NDK module stuff and just have my Makefile set the compiler path (CC, CXX), compile flags (CFLAGS), and linker flags (LDFLAGS) that the NDK was adding. Instead of finding where it was in the NDK Makefiles, I just built the SDL library from the command-line with “./SDL/build-scripts/androidbuildlib.sh V=1” for verbose output and copied the information (for all four CPU architectures it’s built for). After that I was able to get Toy Box building with all libraries enabled. (And yes, this is not the best from the point of view that different NDK versions may add different compile flags.)

New problem. How do I add the libmain.so I built (for arm, arm64, x86, x86_64) into the Android package (.apk)? In the Android NDK documentation I found a module type for pre-built shared library. Perfect. Except I could not get it to work. I was stuck here for a while.

I eventually found on Stack Overflow that someone said it doesn’t work and to use jniLibs in the gradle project to set a directory that contains the libraries. SDL already added it (add libraries in the “app/jni/libs/<ARCH>/” directory). I added the libraries (as “armeabi-v7a/libmain.so”, “arm64-v8a/libmain.so”, etc) and they were added to the APK. Great!

I tried to run it on my moto e (2019) phone. It showed a message box with the point being “SDL_main not found”. C programs have a main() function. SDL uses a define to rename main to SDL_main. However, I don’t use this. Windows needs special handling; the entry point is WinMain() and it needs to call our main() and convert arguments from UTF-16 to UTF-8. I do it myself so that it works for the dedicated server without SDL. SDL_main does nothing on Linux and macOS so I haven’t had a reason to use the SDL_main system and link to the SDLmain library. I changed Toy Box main to be SDL_main on Android. However it still failed with SDL_main missing. I was stuck here for a while.

After some searching I found the cause (from someone in an SDL bug report saying this solution didn’t work for them). In my compile flags, I disable exporting functions and symbols by default (“-fvisibility=hidden”). So I had to export the SDL_main function by adding “__attribute__((visibility("default")))” before “int main( int argc, char **argv )”. I was then able to run Toy Box on Android (without assets). This is the title screen with white/gray square cursor, rotating white/gray quad, and red, green, blue lines for model axis.
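A minimal sketch of an exported entry point when building with -fvisibility=hidden follows. The EXPORT_SYMBOL macro name is made up for this example, and the function is written directly as SDL_main because SDL.h (which would otherwise rename main for you) is not included here.

```c
/* Mark a symbol as visible even when the default visibility is hidden.
   The macro name is invented for this sketch. */
#if defined(__GNUC__) || defined(__clang__)
#define EXPORT_SYMBOL __attribute__((visibility("default")))
#else
#define EXPORT_SYMBOL
#endif

/* SDL's Java glue loads libmain.so and looks this symbol up by name,
   so it has to stay visible in the shared library. */
EXPORT_SYMBOL int SDL_main(int argc, char **argv)
{
    (void)argc;
    (void)argv;
    /* The real program would start here. */
    return 0;
}
```

One way to check the result is to list the dynamic symbols of the built libmain.so and confirm SDL_main is among them.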

It had been about 12 hours since I decided to try to get it working on Android. (I did some other things in the middle though.)

Several days later

Drawing a white/gray texture and red, green, blue model axis lines is great but I really need fonts. So I decided to add support for loading data files from the Android application package (.apk). The SDL Android README tells what directory to add your files into. I was able to get files into the apk easily. Now, how to read them?

SDL has functions for getting the Android internal and external storage paths. I added those to my virtual filesystem (VFS) search paths. Except it didn’t work. On the Android device, I manually browsed to the internal path using a Files app and it was empty. I was expecting the assets that were added to the apk.

Referring back to the SDL Android README it says “you can load [apk assets] using the standard functions in SDL_rwops.h”. SDL_RWops (read/write operations) is something I’ve never paid much attention to. I hadn’t heard of a reason to use it and I also don’t want file access to require SDL. Digging into the SDL source code (SDL_android.c), I found that APK assets don’t have a directory name and require reading using Android-specific AAssetManager instead of fopen() / open().

Fortunately I already have an abstraction layer over file access (instead of it being spread over the whole code base and using libraries’ built-in file support). I did this so that Windows can use the Win32 API and everything else can use the POSIX file API (fopen(), fread(), fseeko(), fclose(), etc). SDL_RWops does this as well but I have some extra options.

I made it so that Android uses SDL_RWops if opening a file in a fake “/apk-assets/” directory (so I can sanely handle the APK asset paths) and passes a relative path to SDL_RWFromFile() so it can be read from the APK. For other paths it uses my file opening code (with options to not overwrite an existing file and to make the file only readable by this user account) and passes the FILE handle to SDL_RWFromFP() to allow SDL_RWops to use the file I opened. On Android, I have all file reading, seeking, etc. go through SDL_RWops so I don’t have to deal with the handle being a FILE or an SDL_RWops.
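The path routing could be sketched like this. It is a simplified illustration, not Toy Box’s file layer: the function names and the HAVE_SDL2 build switch are invented, and the plain fopen() stands in for the custom file opening code with its extra options.

```c
#include <stdio.h>
#include <string.h>

#define APK_ASSETS_PREFIX "/apk-assets/"

/* Returns the path relative to the APK assets when path is inside the fake
   "/apk-assets/" directory, or NULL for ordinary filesystem paths. */
const char *apk_asset_relative_path(const char *path)
{
    size_t prefix_len = strlen(APK_ASSETS_PREFIX);

    if (path != NULL && strncmp(path, APK_ASSETS_PREFIX, prefix_len) == 0)
        return path + prefix_len;
    return NULL;
}

#ifdef HAVE_SDL2 /* hypothetical build switch so the helper above compiles alone */
#include <SDL.h>

/* Opens a file for reading so that all later access goes through SDL_RWops. */
SDL_RWops *open_for_read(const char *path)
{
    const char *asset = apk_asset_relative_path(path);
    FILE *fp;

    if (asset != NULL)
        return SDL_RWFromFile(asset, "rb"); /* relative path: read from the APK */

    fp = fopen(path, "rb");                 /* stand-in for custom open code */
    return fp ? SDL_RWFromFP(fp, SDL_TRUE) : NULL; /* SDL closes fp for us */
}
#endif
```

With this, “/apk-assets/fonts/menu.ttf” (a made-up file name) is passed to SDL as “fonts/menu.ttf”, while any other path is opened normally and then wrapped.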

I didn’t expect file access to be complicated. Though admittedly SDL_RWops does the hard part. However SDL doesn’t have a way to list files in directories so I’ll need to implement that myself in order to find archives to load. I haven’t tried to add a pk3 (zip) archive into the APK yet either. It sounds like something needs to be done to not compress the archive in the APK.

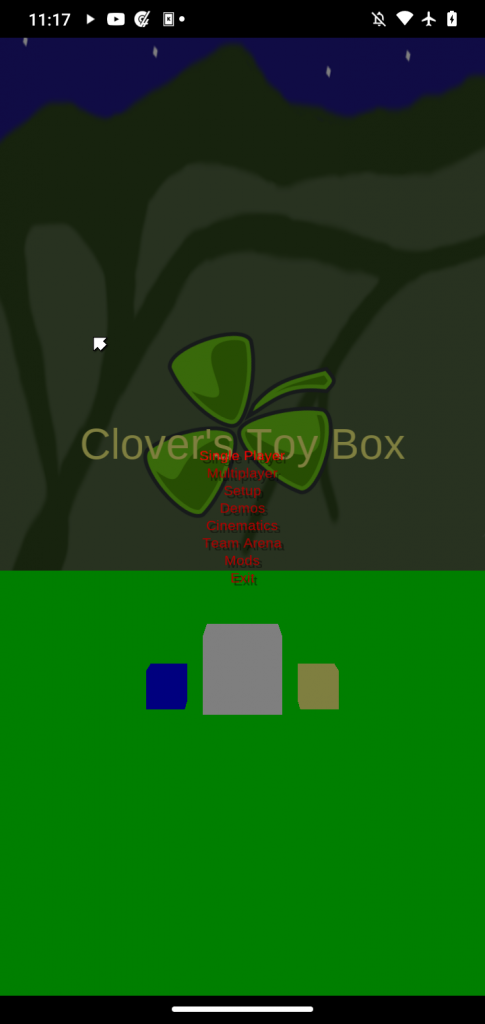

After adding network permissions to the AndroidManifest.xml and fixing a general issue in my game protocol TCP parsing, I got Clover’s Toy Box to run on Android—connecting to network servers and single player.

Black bar on right side due to camera notch.

It also runs on the OUYA (2012, Android 4.1). It runs at only 30 FPS with basically nothing happening so it’s going to be hard to make it usable. Let alone with four player splitscreen.

There is a lot of work needed to actually make it work well on Android. (Ignoring the fact the game logic barely exists. All it can do is move player and collide with map and it doesn’t do those well.) There are things pointed out in the SDL Android README (don’t use exit(), handle app suspend/resume, etc), for touch screen it would need on-screen controls, and the Android keyboard opens at startup due to calling SDL_StartTextInput() at start up instead of when a text field is selected.

I haven’t focused on making Clover’s Toy Box user friendly on desktop computers yet either. It requires using a keyboard to open the console in order to start or join a game and most “options” are things I change in the code and then recompile. For testing on Android, I just hard code joining a server and recompile it… (The Toy Box server is just running on my desktop computer.)

Clover’s Toy Box now runs on GNU/Linux (desktop, Raspberry Pi, PinePhone), macOS, Windows, web browsers, and Android.

6. Summary

In this article:

- I remember the 10 year anniversary of Turtle Arena 0.6.

- I took another look at “Toy Box renderer for Spearmint” in Turtle Arena.

- I explained how to use ANGLE on Windows with SDL 2 in order to use OpenGL ES 2.0 instead of OpenGL 1.1.

- I went over porting Clover’s Toy Box renderer to OpenGL 1.1 and that a CPU vertex shader can be used with modern OpenGL.

- I detailed the initial port of Clover’s Toy Box to Android.
